Wednesday, June 28, 2006

8 years ago... Xerox PARC

Posted by Unknown at Wednesday, June 28, 2006

Labels: California, Eelco Kruizinga, innovation, Timo Kouwenhoven, XEROX PARC

Sunday, June 25, 2006

The New New Media

Rank 23: Kevin Rose (Digg) and Jimmy Wales (Wikipedia)

Why they matter: Old media is all about reinforcing the importance of the institution as the editorial filter. The new new media is all about the importance of the reader as the editorial filter. Tens of millions of users can create a collaborative intelligence that's far smarter than any one editor could ever hope to be. But the right technology is key, and that's where Rose and Wales made their mark. Rose created a news aggregator where readers submit articles for consideration and "vote" on which stories should receive prominent placement; the readers' picks automatically create Digg's ever-changing front page. Wales's Wikipedia is user-researched and user-edited, combining timeliness, breadth, and accuracy in a way that traditional encyclopedias simply can't match. Taken together, they symbolize the revolution that's taking place in the way that news and information will be compiled in the years ahead.
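The mechanism described for Digg, with readers submitting stories and their votes deciding what reaches the front page, can be sketched in a few lines of Python. This is a minimal illustration assuming a plain vote count; Digg's real ranking algorithm is not described in this post, and the names `VoteBoard`, `submit`, `vote` and `front_page` (as well as the example.com URLs) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Story:
    title: str
    url: str
    votes: int = 0


class VoteBoard:
    """Hypothetical reader-driven front page: readers submit stories, votes decide placement."""

    def __init__(self) -> None:
        # Keyed by URL so the same story cannot be submitted twice.
        self._stories: Dict[str, Story] = {}

    def submit(self, title: str, url: str) -> Story:
        # Re-submitting a known URL returns the existing story unchanged.
        if url not in self._stories:
            self._stories[url] = Story(title, url)
        return self._stories[url]

    def vote(self, url: str) -> int:
        story = self._stories[url]
        story.votes += 1
        return story.votes

    def front_page(self, size: int = 3) -> List[str]:
        # Most votes first; sorted() is stable, so ties keep submission order.
        ranked = sorted(self._stories.values(), key=lambda s: s.votes, reverse=True)
        return [s.title for s in ranked[:size]]


board = VoteBoard()
for title in ("A", "B", "C"):
    board.submit(title, f"http://example.com/{title.lower()}")
for _ in range(3):
    board.vote("http://example.com/b")
board.vote("http://example.com/c")
print(board.front_page(2))  # ['B', 'C']
```

Ranking purely by total votes is the simplest possible rule; it ignores the age of a story, so older stories only drop off the front page when newer ones collect more votes.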

Posted by Unknown at Sunday, June 25, 2006

Labels: web 2.0

Search wars are shaping up to be a battle of man vs. machine

Rank 13 on CNN's list of people who matter now.

CNN says:

(...) Our goal was to identify people whose ideas, products, and business insights are changing the world we live in today - those who are reshaping our future by inventing important new technologies, exploiting emerging opportunities, or throwing their weight around in ways that are sure to make everyone else take notice (...)

Stewart Butterfield and Caterina Fake, co-founders, Flickr

Why they matter: As the creators of Flickr, the phenomenally successful photo/social-networking site, the husband-and-wife team has become the poster couple for the Web 2.0 movement. But that's not why they appear here. Flickr was acquired by Yahoo last year, and now Butterfield and Fake have been deployed to spread some of Flickr's social-media DNA throughout the company. That means finding new ways to emphasize human-powered keyword tagging and filtering, on everything from your own search results to travel itineraries and restaurant reviews.

Incorporating the subjective nuances of human judgment into its search results has become an essential part of Yahoo's strategy to compete against Google, its algorithm-obsessed rival. Thanks to the Flickr kids, the search wars are shaping up to be a battle of man vs. machine.

Posted by Unknown at Sunday, June 25, 2006

Labels: web 2.0

Popular URLs to the latest buzzzzz (web 2.0)

Popurls.com aims to provide a simple, clean portal to the “most popular” URLs from the hottest sites out there, as soon as they break.

The interface is simple, laid out in columns for each of the sites, with full-width areas for the two more graphical sites included, flickr.com and youtube.com. For the sites whose feeds provide more detail than just the title of the post, the site uses the moo.fx library’s effects to slide out the extra content when the mouse is over the topic’s title.

The site also refreshes itself, fetching the latest headlines from each source and updating the page with the new items pulled from the feeds. On the text feeds there is also an option to view “More” entries for each list, in case you missed some.

Posted by Unknown at Sunday, June 25, 2006

Labels: web 2.0

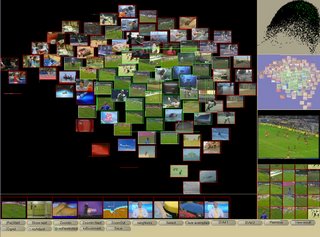

Semantic Video Search Engines (Dutch Research)

A couple of weeks ago I was invited to a series of presentations by several Dutch research projects in the area of semantic media retrieval. The CHOICE and MUNCH projects presented their preliminary results and next steps. Guus Schreiber presented CHOICE together with Luit Gazendam and Hennie Brugman. Arnold Smeulders presented MUNCH, with a demo by Cees Snoek of the MediaMill semantic video search engine.

MediaMill

The MediaMill semantic video search engine bridges the gap between research and applications. It integrates state-of-the-art techniques developed at the Intelligent Systems Lab Amsterdam of the University of Amsterdam and applies them to realistic problems in video indexing. The techniques employed in MediaMill originate from disciplines such as image and video processing, computer vision, language technology, machine learning and information visualization. To stay competitive with the state of the art, MediaMill participates in the yearly TRECVID benchmark.

CHOICE: A Thesaurus Browser at Sound and Vision

Over the last few years, people at the Netherlands Institute for Sound and Vision have spent much effort designing a state-of-the-art thesaurus to support their documentation work, and populating it on the basis of several previously existing thesauri and term lists. This thesaurus (the GTAA, Gemeenschappelijke Thesaurus Audiovisuele Archieven, i.e. Common Thesaurus for Audiovisual Archives) has approximately 160,000 terms in six facets:

- Subjects

- Genres

- Person Names

- Names

- Makers

- Locations

The GTAA plays a central role in research and software development within the CHOICE project. As a pilot project for the CHOICE research program, a web application for browsing and searching for relevant terms in the GTAA was built. The user interface was designed to exploit, in a useful and user-friendly manner, all the structure and information present in the thesaurus or added by CHOICE. Examples are broader/narrower term hierarchies, thematic classification of terms, associative relations between terms and cross-facet links. When searching for terms, synonyms and spelling variations provide additional entry points.
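The structure described for the GTAA (facets, broader/narrower hierarchies, associative relations, and synonyms or spelling variants as extra entry points) can be illustrated with a small in-memory model. This is a hypothetical sketch, not the CHOICE browser's actual implementation: the `Term` and `Thesaurus` classes, the case- and accent-folding used to match spelling variants, and the example terms are all assumptions made for illustration.

```python
import unicodedata
from dataclasses import dataclass, field
from typing import Dict, List, Optional


def normalize(label: str) -> str:
    """Fold case and strip accents so simple spelling variants share one key."""
    decomposed = unicodedata.normalize("NFKD", label)
    bare = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return bare.casefold().strip()


@dataclass
class Term:
    preferred: str                                       # preferred label
    facet: str                                           # e.g. "Subjects", "Locations"
    alt_labels: List[str] = field(default_factory=list)  # synonyms, variant spellings
    broader: List[str] = field(default_factory=list)     # preferred labels of broader terms
    related: List[str] = field(default_factory=list)     # associative / cross-facet links


class Thesaurus:
    def __init__(self) -> None:
        self.terms: Dict[str, Term] = {}
        self._entry_points: Dict[str, str] = {}  # normalized label -> preferred label

    def add(self, term: Term) -> None:
        self.terms[term.preferred] = term
        # Every synonym or variant becomes an extra entry point to the same term.
        for label in [term.preferred, *term.alt_labels]:
            self._entry_points[normalize(label)] = term.preferred

    def lookup(self, label: str) -> Optional[Term]:
        preferred = self._entry_points.get(normalize(label))
        return self.terms.get(preferred) if preferred is not None else None

    def narrower(self, preferred: str) -> List[str]:
        # Narrower terms are derived as the inverse of the stored broader links.
        return [t.preferred for t in self.terms.values() if preferred in t.broader]

    def ancestors(self, preferred: str) -> List[str]:
        # Breadth-first walk up the broader links; `seen` also guards against cycles.
        seen: List[str] = []
        queue = list(self.terms[preferred].broader)
        while queue:
            label = queue.pop(0)
            if label in seen:
                continue
            seen.append(label)
            parent = self.terms.get(label)
            if parent is not None:
                queue.extend(parent.broader)
        return seen


th = Thesaurus()
th.add(Term("sports", "Subjects"))
th.add(Term("football", "Subjects", alt_labels=["soccer"], broader=["sports"]))
th.add(Term("Zürich", "Locations"))

print(th.lookup("Soccer").preferred)  # football
print(th.lookup("ZURICH").preferred)  # Zürich
print(th.narrower("sports"))          # ['football']
print(th.ancestors("football"))       # ['sports']
```

Storing only the broader links and deriving narrower terms from them keeps the hierarchy from becoming inconsistent in one direction, at the cost of a scan over all terms per query.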

MuNCH

In the MuNCH project, MediaMill aims to deliver a working video retrieval system to the national (video) archive of Sound and Vision. A first version, making use of the engines for video analysis and interaction, is planned for the end of 2006. In MuNCH, MediaMill cooperates with Maarten de Rijke on natural language processing, with Guus Schreiber on ontologies of video material and, of course, with Sound and Vision, where Annemieke de Jong provides the basic material.

Posted by Unknown at Sunday, June 25, 2006

Labels: media retrieval, semantic search

Tuesday, June 20, 2006

New book on AudioVisual Archives

Available from: SAP Publishing

Chapter 3: Catalogues

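- Arnold W.M. Smeulders, Franciska de Jong and Marcel Worring, Multimedia information technology and the annotation of video

- Timo Kouwenhoven, Zoeken + Navigeren = Vinden! Over zoekers, zoekgedrag, zoekmachines en hun interfaces bij het zoeken naar audiovisuele content. (Search + Navigate = Find! On searchers, search behaviour, search engines and their interfaces when searching for audiovisual content.)

- Arjo van Loo, iMMix: multimediacatalogus (iMMix: multimedia catalogue)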

Posted by Unknown at Tuesday, June 20, 2006

Labels: archives, audiovisual, book, media retrieval

Wednesday, June 14, 2006

Defining web 2.0 (ex post facto)

I found an interesting definition of Web 2.0 on Wikipedia. It offers a keen ex post facto explanation of the '2.0' part that intrigues many of us. I quote:

Many recently developed concepts and technologies are seen as contributing to Web 2.0, including weblogs, linklogs, wikis, podcasts, RSS feeds and other forms of many-to-many publishing; social software, web APIs, web standards, online web services, and others. Proponents of the Web 2.0 concept say that it differs from early web development, retroactively labeled Web 1.0, in that it is a move away from static websites, the use of search engines, and surfing from one website to the next, to a more dynamic and interactive World Wide Web. Others argue that the original and fundamental concepts of the WWW are not actually being superseded. Skeptics argue that the term is little more than a buzzword, or that it means whatever its proponents want it to mean in order to convince their customers, investors and the media that they are creating something fundamentally new, rather than continuing to develop and use well-established technologies.

On September 30, 2005, Tim O'Reilly wrote a seminal piece neatly summarizing the subject. The mind map above sums up the memes of Web 2.0 with example sites and services attached. It was created by Markus Angermeier on November 11, 2005.

What is now termed "Web 1.0" often consisted of static HTML pages that were updated rarely, if at all. They depended solely on HTML, which a new Internet user could learn fairly easily. The success of the dot-com era depended on a more dynamic Web (sometimes labeled Web 1.5) where content management systems served dynamic HTML web pages created on the fly from a content database that could more easily be changed. In both senses, so-called eyeballing was considered intrinsic to the Web experience, thus making page hits and visual aesthetics important factors. Proponents of the Web 2.0 approach believe that Web usage is increasingly oriented toward interaction and rudimentary social networks, which can serve content that exploits network effects with or without creating a visual, interactive web page.

In one view, Web 2.0 sites act more as points of presence, or user-dependent web portals, than as traditional websites. They have become so advanced that new Internet users cannot create such websites themselves; they are only users of web services built by specialist professionals. Access to consumer-generated content facilitated by Web 2.0 brings the web closer to Tim Berners-Lee's original concept of the web as a democratic, personal, and DIY medium of communication.

Posted by Unknown at Wednesday, June 14, 2006

Labels: web 2.0

The Semantic Gap and web 2.0

After I sent out the e-zine through RSS, a weekly magazine responded and invited me to write an article for its tech section. It will be published on June 16, 2006 in Automatiseringgids.

Posted by Unknown at Wednesday, June 14, 2006

Labels: semantic search, semantic web, social bookmarking, web 2.0

Search Engines and web 2.0: aid or burden?

A short position paper I wrote and published in our corporate RSS newsletter.

Posted by Unknown at Wednesday, June 14, 2006

Labels: search engines, social bookmarking, web 2.0